The hidden costs of duplicate business partner records

Duplicate business partner records can have a significant impact on organizations, leading to hidden costs that can hinder operational efficiency and decision-making processes. But what exactly are the hidden costs associated with duplicates and their implications across various areas of business operation? By understanding them, you can take proactive measures to address duplicates and optimize your processes.

In the realm of data management, one challenge looms large: duplicate business partner master data records. These duplicates, scattered across various systems and databases, pose a substantial threat to organizations. Their existence compromises multiple business functions, resulting in increased costs, inefficient operations, and heightened compliance risks. In this article, we delve into the intricacies of this predicament, shedding light on the challenges faced in identifying and managing duplicate records, the risks they entail, and the costs they carry within.

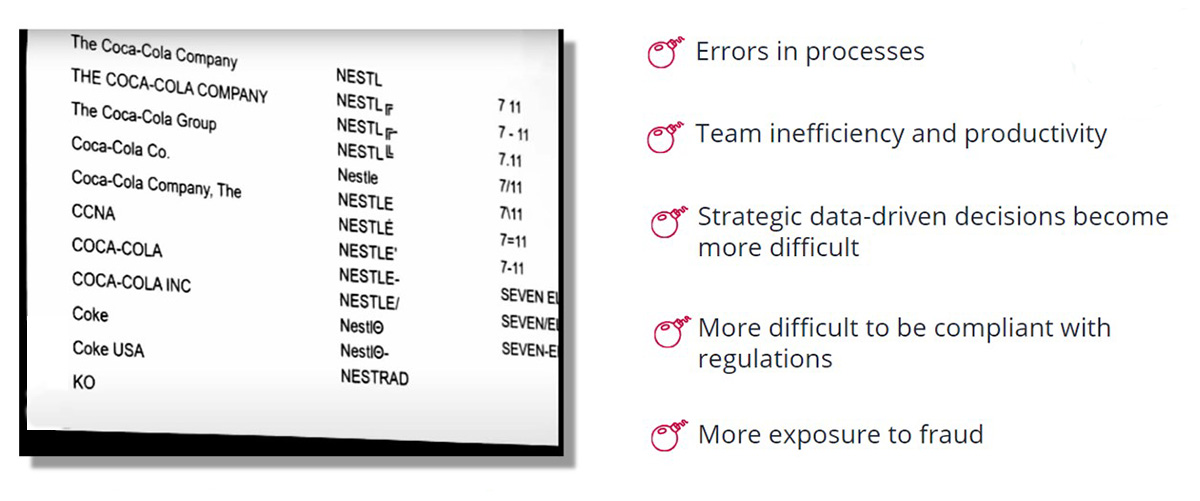

The impact of duplicates on business

Duplicate business partner records can have far-reaching consequences across various areas of business operations. Let's explore a few examples:

Compliance and Risk Management

Background checks for new business partners are crucial for compliance and corporate risk management. Duplicates not only multiply the workload for these checks but also increase the cost of risk assessments. Ensuring accurate and reliable data is essential to mitigate compliance risks effectively.

Credit and Vendor Risk Management

Managing relationships with business partners becomes more complex when duplicates exist. Internal instruments and blocking mechanisms should cover all instances of business partner records. Duplicates extend the exposure with customers, leading to potential confusion and inefficiencies in managing supplier relationships and spending analysis.

Reporting

Duplicates create challenges when it comes to group reporting and strategic decision-making. Without a clean master data foundation, organizations may struggle to obtain accurate insights, compromising their ability to make informed decisions. Reliable and consistent data is crucial for effective reporting and analysis.

Perils of inefficient duplicate identification

Efficiently identifying and managing duplicate business partner records presents substantial challenges. Let's explore the specific obstacles encountered when matching business partner names and addresses:

Matching Business Partner Names:

- Non-Standardized Representation: Names vary in their form, ranging from abbreviations to legal forms and uppercase or lowercase letters.

- Diverse Character Representations: Names can include characters from different scripts, such as Chinese, Cyrillic, or Latin, complicating matching processes.

- Misplaced Information: Inaccurate representation, where extraneous details like c/o information are added to the name instead of the relevant data attribute, hampers accurate matching.

- Acronyms vs. Full Names: Some instances utilize acronyms, while others employ full names, causing inconsistency in the matching process.

- Misspellings: Various forms of misspellings, such as added, omitted, replaced, or transposed characters, introduce further complexities.

- Inconsistent Name Component Order: The order of name components can vary, leading to inconsistencies in matching (e.g., "Lindner Hotel Hamburg" vs. "Hotel Lindner in Hamburg").

- Inconsistent Representation: Differences in the representation of the name across different data models add to the challenge of matching and deduplication.

Matching Business Partner Addresses:

- Misspellings: Errors in spelling cities or thoroughfares create obstacles in accurate address matching.

- Inconsistent Abbreviations: Lack of consistent usage of abbreviations (e.g., "Lindenstr." vs. "Lindenstrasse") adds complexity.

- Misplaced Information: Inclusion of c/o information or building details within thoroughfares, or inconsistent placement of house numbers, further complicates address matching.

- Missing Attributes: Variations in data systems may lead to missing attributes, such as building information, causing discrepancies in address matching.

- Original Names vs. International Names: Inconsistencies between original and international names of cities (e.g., "München" vs. "Munich," "Mailand" vs. "Milano") hinder accurate address matching.

- Varied Character Usage: The presence of different character scripts (e.g., Chinese characters) creates additional challenges in address matching.

- Semantic Ambiguities: Certain fields, such as post codes in Ireland (Eircode vs. GeoDirectory vs. Loc8 Code), pose semantic ambiguities, impeding precise address matching.

- Distinctions between Post Box and Street Addresses: Separate maintenance of post box and street addresses, or their coexistence within a single address data object, adds complexity to address matching

The ultimate no-go: the costs

Many companies develop strategies for identifying and addressing duplicate business partner data. But why exactly is it so critical to eliminate these duplicates? The answer is simple: duplicates cost companies a lot of money.

Process Costs

One of the key hidden costs of duplicate business partner records lies in the increased process costs. Identifying and managing suppliers and customers becomes a time-consuming task when duplicates are present. The identification process is triggered multiple times for the same business partner, multiplying the workload and straining resources. Moreover, as business relationships with partners grow over time, the process costs to remediate duplicate records in subsequent systems or processes tend to rise exponentially. This can lead to inefficiencies, delays, and increased labor efforts.

Data Acquisition Costs

Data enrichment, often relying on external commercial data providers, incurs significant costs when duplicates are involved. These providers typically charge on a quota or request basis, and with duplicates, the number of requests and data enrichment activities can explode. This not only adds financial burden but also increases the complexity of managing data sources and negotiating contracts with these providers. Managing and controlling data acquisition costs becomes crucial in avoiding unnecessary expenditures.

Data Analytics & Reporting

Duplicate business partner records can compromise the value and reliability of data used for analytics and reporting purposes. Analyzing and reporting on duplicate-ridden data can lead to misleading insights and inaccurate conclusions. To ensure the accuracy and integrity of reports, organizations are often forced to spend additional time and resources cleansing the data. Failing to address duplicates in data analytics and reporting can have far-reaching consequences, potentially influencing strategic decisions in procurement, marketing, and sales. Reliable data is the foundation for effective decision-making.

Calculating the impact

Consider a dataset with 1 million records, assuming a conservative 10% rate of duplicates. For each duplicate, let's estimate a 30-minute process cost to address the issue. With these assumptions, the calculation reveals a staggering 6250 days of work required to handle the duplicates, equivalent to 28 full-time employees. This calculation highlights the significant time and resources wasted on managing duplicates alone.

Data deduplication: how others deal with duplicates

In large merger and acquisition projects, data cleansing can be challenging. At CDQ, we specialize in smart data management solutions. For instance, we helped a client consolidate 1.3 million customer and vendor records in just 10 weeks. This process involved eliminating 80,000 duplicates and improving data quality across a million records. Our expertise ensures streamlined operations and reliable post-merger data. Partner with CDQ for efficient data consolidation and enhanced data quality in M&A projects.

Efficient duplicate management made easy at Sartorius

Knowing the complexities that arise when duplicates are present in SAP systems, especially when transactional data is involved, Sartorius was looking for a sustainable solution to mitigate duplicate-related risks: not only ensuring that every data defect could be addressed post-creation, but also onboarding clean, unique records into the system at the first instance.

Duplicate checks are seamlessly integrated into Sartorius system, running automatically in the background. The algorithm swiftly identifies potential duplicates, triggering a streamlined process. When a potential duplicate is detected, a work item is generated for manual review, ensuring accuracy and precision.

Sartorius’ focus remains on simplifying duplicate management. From automated detection to manual review, they provide a comprehensive solution that maximizes data integrity and minimizes workload. The process is expected to encompass additional use cases, providing a comprehensive and reliable solution for various data management needs.

Addressing the hidden costs

The hidden costs of duplicate business partner records pose a significant challenge for organizations across various business functions. From process costs to data acquisition expenses and compromised data analytics, duplicates impact operational efficiency and decision-making processes. By understanding and quantifying these hidden costs, organizations can take proactive steps to address duplicates, optimize their processes, and leverage data deduplication solutions to work with reliable data for strategic decision-making. Investing in robust data management practices and leveraging advanced solutions are essential to mitigate the impact of duplicates and drive long-term business success.

Get our e-mail!

Related blogs

Stepping out of silo thinking: Henkel’s data quality story

A refreshing look at how Henkel tackles an immensely complex data landscape: candid disussion with master data experts, Sandra Feisel and Stefanie Kreft.

The e-invoicing reality: the gateway is ready, but is your data?

Over the past decade, the EU has steadily shifted from encouraging electronic invoices to mandating them. And while the technology obviously plays an important…

How Henkel is turning master data quality into a service

Every now and then, you come across a project that makes you stop and think: “Now that’s how it should be done!” That’s exactly the case with Henkel and their…